In µDialBot, our ambition is to actively incorporate human-behavior cues into spoken human-robot communication. We intend to reach a new level in exploiting the rich information carried by the audio and visual data that humans produce when they interact with robots.

In particular, extracting highly informative verbal and non-verbal perceptual features will enhance the robot's decision-making ability, enabling it to take speech turns more naturally and to switch between multi-party/group interactions and face-to-face dialogues when required.

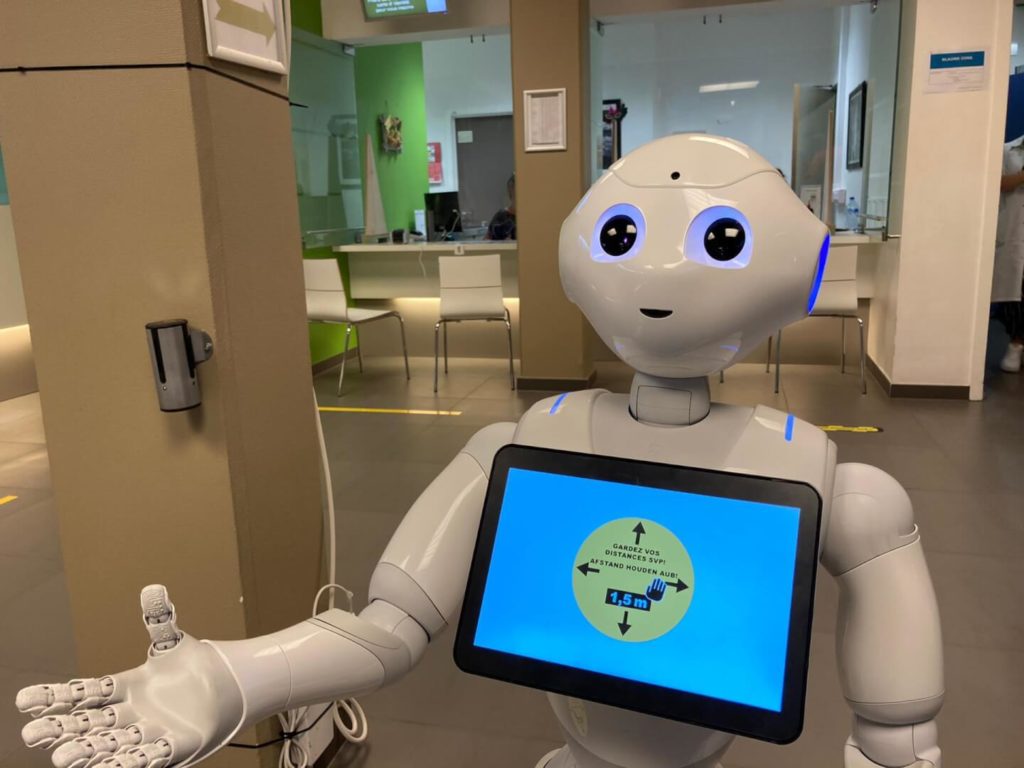

Recently, there has been increasing interest in companion robots that can assist people in their everyday lives and communicate with them. These robots are perceived as social entities, and several recent studies have acknowledged their usefulness for the healthcare and psychological well-being of elderly people. Patients, their families and medical professionals appreciate the potential of such robots, provided that several technological barriers are overcome in the near future, most notably the ability to move, see and hear in order to communicate naturally with people, well beyond touch screens and voice commands.

The scientific and technological results of the project will be implemented on a commercially available social robot, then tested and validated in several use cases in a day-care hospital unit. Large-scale data collection will complement the in-situ tests to fuel further research.

Partners

Key persons

Xavier Alameda-Pineda

INRIA

Research Scientist

Perception Team

Olivier Alata

LabHC Univ. Jean-Monnet

Computer Scientist

Computer Vision Team

Samuel Benveniste

CEN STIMCO

Computer scientist,

CTO of Broca Living Lab

Sébastien Da Cunha

AP-HP

Clinical psychologist

Researcher at Broca Living Lab

Timothée Dhaussy

LIA Avignon University

PhD student, Computer scientist

Vocal Interactions Group

Christophe Ducottet

LabHC Univ. Jean-Monnet

Computer Scientist

Computer Vision Team

Florian Gras

ERM Automatismes

Technical project manager & Developer

Radu Horaud

INRIA

Senior Research Scientist

Perception Team

Bassam Jabaian

LIA Avignon University

Computer scientist

Vocal Interactions Group

Hubert Konik

LabHC Univ. Jean-Monnet

Computer Scientist

Computer Vision Team

Prof Fabrice Lefèvre

LIA Avignon University

Computer scientist

Vocal Interactions Group

Anne-Claire Legrand

LabHC Univ. Jean-Monnet

Computer Scientist

Computer Vision Team

Cyril Liotard

ERM Automatismes

CEO

Cécilia Palmer

AP-HP

Clinical psychologist

Researcher at Broca Living Lab

Maribel Pino

AP-HP

Cognitive psychologist,

Head of Broca Living Lab

Prof Anne-Sophie Rigaud

AP-HP

Head of Broca Geriatric Department

Organization

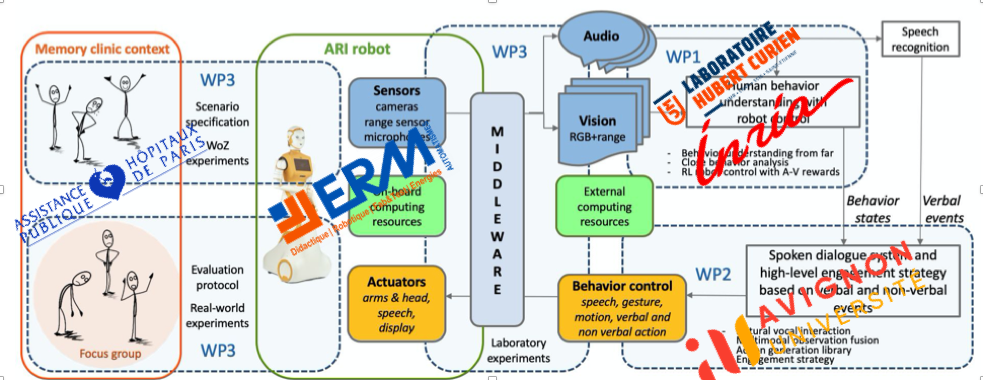

The methodology followed in µDialBot will be both mainstream, in line with the classical methodology for developing machine-learning-based approaches, and pioneering, since novel online learning techniques will be investigated to keep the amount of initial data collection to a minimum. Building on deep learning techniques that have proven effective in other domains, the project will propose a new formalism dedicated to the proactive, perception-based control of a conversational robot.

To this end, the general methodology of the project consists of two operational building blocks:

(i) the estimation of non-verbal states, and

(ii) the learning of an event-guided strategy.

These two blocks will be integrated on the robotic platform through a software abstraction layer. After a first round of “Wizard of Oz” (WoZ) experiments to test the proposed models, the complete system will be gradually introduced into the real clinical setting following a well-defined protocol.

Three main work packages will implement the project's work programme:

WP1 Human behavior understanding with robot control

Objectives: to develop methods and algorithms to extract human behavior understanding (HBU) cues from audio and visual data.

To design improved extraction methods that are robust to the various perturbations of a real-world context and that provide a quantitative estimate of the reliability of the extracted cues. To develop algorithms for far-range recognition of individual and group activities and for close-range estimation of individual facial expressions. To develop methods and algorithms for online learning of robot behaviors, leading to robust extraction of the close-range HBU cues required for face-to-face communication (WP2).

WP2 Spoken dialogue system and high-level engagement strategy based on verbal and non-verbal events

Objectives: to develop the robot's natural vocal interaction abilities.

On top of this, a multimodal decision-making process will be developed that combines all verbal and non-verbal event observations with contextual features to define a global behavioral strategy. The strategy will be learned mostly in situ, through online training procedures, and will condition the robot's engagement patterns in its various usage situations (multi-party, face-to-face).
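To make the idea of an event-guided strategy learned online more concrete, here is a minimal sketch. It is not the project's method: the project plans deep-learning-based formalisms, whereas this sketch swaps in a much simpler tabular epsilon-greedy contextual bandit. The context tuple (speech ended, gaze on robot, number of people), the action names and the reward signal are all hypothetical, chosen only to show how verbal events, non-verbal events and contextual features can jointly drive a decision that is refined from feedback during use.

```python
import random
from collections import defaultdict

# Hypothetical robot actions (illustrative only, not taken from the project).
ACTIONS = ("wait", "take_turn", "address_group", "address_person")


class EventGuidedPolicy:
    """Epsilon-greedy contextual bandit over discrete event/context tuples."""

    def __init__(self, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.values = defaultdict(float)  # (context, action) -> mean reward
        self.counts = defaultdict(int)    # (context, action) -> number of updates

    def choose(self, context):
        # Explore with probability epsilon, otherwise pick the best-valued action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.values[(context, a)])

    def update(self, context, action, reward):
        # Incremental running mean: learning happens online, no stored dataset.
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (reward - self.values[key]) / self.counts[key]


def simulated_reward(context, action):
    # Hypothetical feedback: taking the turn pays off only when the user has
    # stopped speaking and looks at the robot; in a group, address the group.
    speech_ended, gaze_on_robot, n_people = context
    if not (speech_ended and gaze_on_robot):
        return 1.0 if action == "wait" else 0.0
    best = "address_group" if n_people > 1 else "take_turn"
    return 1.0 if action == best else 0.0


# Usage: simulate interaction episodes with random events and contexts.
env_rng = random.Random(1)
policy = EventGuidedPolicy(epsilon=0.2, seed=0)
for _ in range(5000):
    # Context = (verbal event, non-verbal event, contextual feature).
    ctx = (env_rng.random() < 0.5, env_rng.random() < 0.5, env_rng.choice((1, 3)))
    act = policy.choose(ctx)
    policy.update(ctx, act, simulated_reward(ctx, act))
```

The tabular form keeps the example short and easy to inspect; a realistic system would replace the lookup table with a learned function of continuous perceptual features, and the simulated reward with feedback observed in situ.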

WP3 Specifications, integration on robotic platform, iterative and final evaluation of the human-robot interactions in a memory clinic

Objectives: to define the experimental protocols for both laboratory and real-world settings, and to conduct “Wizard of Oz” (WoZ) experiments.

To progressively integrate the software modules of WP1 and WP2 and conduct interaction experiments in the laboratories. To evaluate the overall architecture in the clinical context with small groups of patients before the final experiments in the waiting room.

For further information, please contact muDialBot’s coordinator:
